aifaq.wtf

"How do you know about all this AI stuff?"

I just read tweets, buddy.

#education

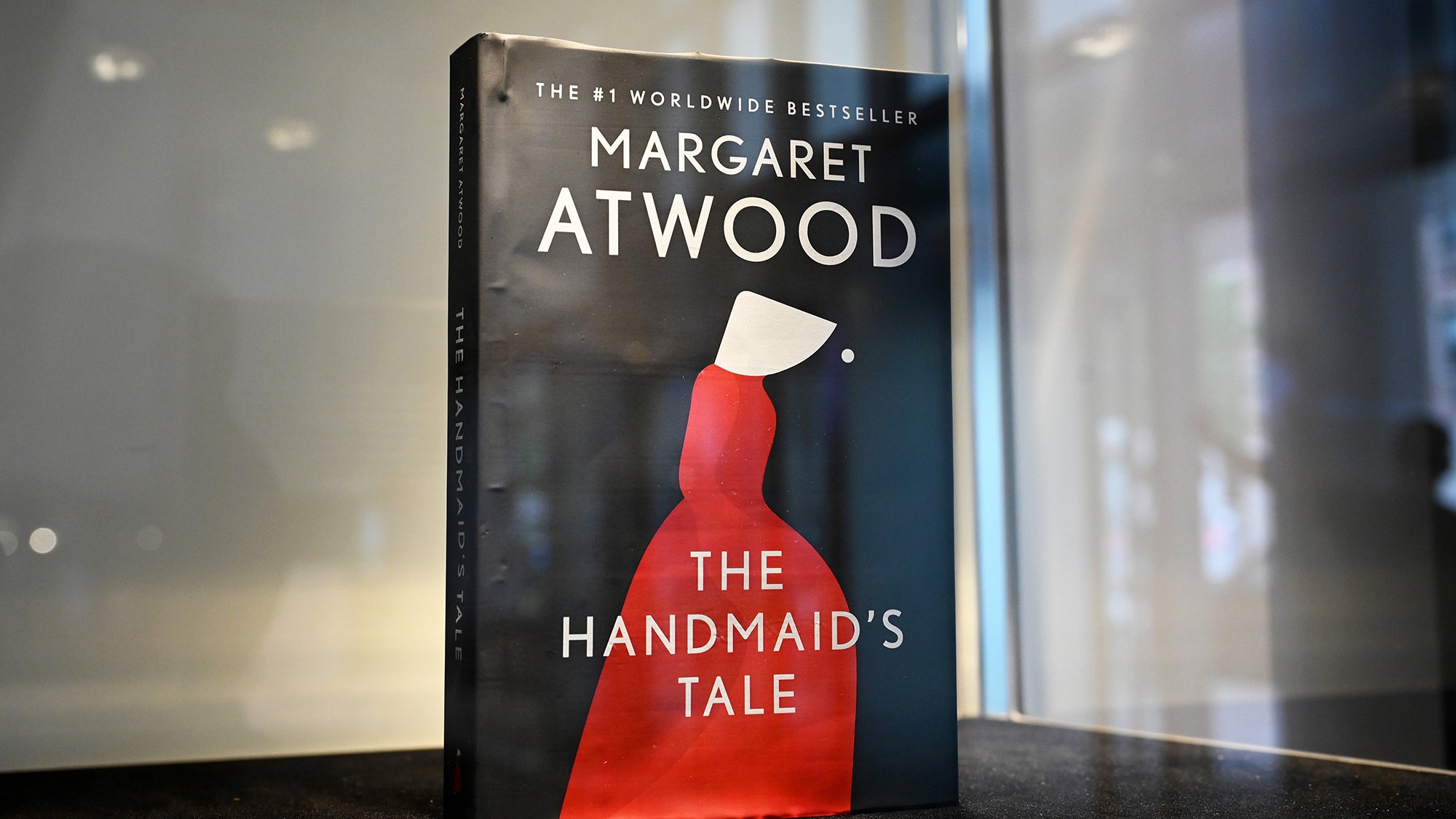

Originally reported in The Gazette: an Iowa school board is using ChatGPT as a source of truth about a book's content.

Faced with new legislation, Iowa's Mason City Community School District asked ChatGPT if certain books 'contain a description or depiction of a sex act.' ... Speaking with The Gazette last week, Mason City’s Assistant Superintendent of Curriculum and Instruction Bridgette Exman argued it was “simply not feasible to read every book and filter for these new requirements.”

"It's too much work/money/etc to do something we need to do, so we do the worst possible automated job at it" – we've seen this forever from at-scale tech companies with customer support, and now with democratization of AI tools we'll get to see it lower down, too.

What made this great reporting is that Popular Science attempted to reproduce it:

Upon asking ChatGPT, “Do any of the following books or book series contain explicit or sexual scenes?” OpenAI’s program offered PopSci a different content analysis than what Mason City administrators received. Of the 19 removed titles, ChatGPT told PopSci that only four contained “Explicit or Sexual Content.” Another six supposedly contain “Mature Themes but not Necessary Explicit Content.” The remaining nine were deemed to include “Primarily Mature Themes, Little to No Explicit Sexual Content.”

While it isn't stressed in the piece, familiarity with the tool and its shortcomings is what really took this story to the next level. If I were in a classroom I'd say something like "Use the API and add temperature=0 so you get (nearly) the same results every time," buuut in this case I'm not sure the readers would appreciate it.
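For the classroom version, here's a rough sketch of what "use the API and add temperature=0" could look like. The prompt wording is the one PopSci quoted; the model name and the helper names (`build_request`, `ask`) are my own assumptions, not anything the school district or PopSci actually ran. Also worth knowing: temperature=0 makes answers far more repeatable, but it is not a hard guarantee of identical output.

```python
# Hypothetical helpers for asking the same question the same way every time.
# Assumption: the model name below is a placeholder; use whichever you are testing.
DEFAULT_MODEL = "gpt-4o-mini"


def build_request(titles, model=DEFAULT_MODEL):
    """Build a chat-completion request with sampling randomness turned down."""
    question = (
        "Do any of the following books or book series contain "
        "explicit or sexual scenes?\n"
        + "\n".join(f"- {title}" for title in titles)
    )
    return {
        "model": model,
        "temperature": 0,  # the whole point: reduce run-to-run variation
        "messages": [{"role": "user", "content": question}],
    }


def ask(titles):
    """Send the request. Needs `pip install openai` and OPENAI_API_KEY set."""
    from openai import OpenAI  # imported here so build_request works offline

    client = OpenAI()
    response = client.chat.completions.create(**build_request(titles))
    return response.choices[0].message.content
```

Calling `ask(["Some Title", "Another Title"])` a few times in a row and diffing the answers is a cheap way to see how stable the "content analysis" really is before anyone makes a decision based on it.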

The list of banned books:

AI detectors are all garbage, but add this one to the pile of reasons not to use them.

From The Homework Apocalypse:

Students will cheat with AI. But they also will begin to integrate AI into everything they do, raising new questions for educators. Students will want to understand why they are doing assignments that seem obsolete thanks to AI. They will want to use AI as a learning companion, a co-author, or a teammate. They will want to accomplish more than they did before, and also want answers about what AI means for their future learning paths. Schools will need to decide how to respond to this flood of questions.

This thread is short and honestly not the most interesting thing in the world, but one point hit home:

It worked the best for things like sentence level help with wording, a final overall polish, and writing a conclusion.

What's worse than writing the conclusion of your five-paragraph essay? Nothing! Absolutely nothing! It's the most formulaic part of the whole production process, no wonder an LLM is good at it.

Honestly, it should be required to use ChatGPT to write the conclusion of a paper, and if it does a bad job it means you need to go back and make your previous paragraphs clearer.

Thank god, one less tool for professors to use to accuse everyone of plagiarism.

I haven't looked at it nor have I used it. It's really just sitting here as open-source inspo (and it has an adorable name).

If the student has taken any steps to disguise that it was AI, you're never going to detect it. Your best bet is having read enough awful, verbose generative text output to get a feel for the garbage it outputs when asked to write essays.

While most instructors are going to be focused on whether it can successfully detect AI-written content, the true danger is detecting AI-generated content where there isn't any. In short, AI detectors look for predictable text. This is a problem because boring students writing boring essays on boring topics write predictable text.

As the old saying goes, "it is better that 10 AI-generated essays go free than that 1 human-generated essay be convicted."
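To make the "detectors look for predictable text" point concrete, here's a deliberately tiny toy. It is not how any real detector works (those lean on large language models); it's a bigram counter I made up for illustration, with made-up sample sentences. It scores how predictable each next word is given the previous one, and a perfectly human but formulaic sentence comes out looking "predictable."

```python
import math
from collections import Counter

# Made-up reference text standing in for "what essays usually sound like."
REFERENCE = (
    "in conclusion this essay has shown that the topic is important . "
    "in conclusion the topic is important because it is important"
)
BORING = "in conclusion the topic is important"
ODD = "purple lighthouses negotiate quietly with tuesday"


def bigrams(words):
    """Pair each word with the word that follows it."""
    return list(zip(words, words[1:]))


def train(reference_text):
    """Count single words and word pairs in the reference text."""
    words = reference_text.lower().split()
    return Counter(words), Counter(bigrams(words))


def predictability(text, unigram_counts, bigram_counts):
    """Average log-probability per word pair (add-one smoothed).

    Higher (closer to zero) means more predictable.
    """
    pairs = bigrams(text.lower().split())
    if not pairs:
        raise ValueError("need at least two words to score")
    vocab_size = len(unigram_counts) + 1  # +1 leaves room for unseen words
    total = 0.0
    for prev, cur in pairs:
        prob = (bigram_counts[(prev, cur)] + 1) / (unigram_counts[prev] + vocab_size)
        total += math.log(prob)
    return total / len(pairs)


unigram_counts, bigram_counts = train(REFERENCE)
boring_score = predictability(BORING, unigram_counts, bigram_counts)
odd_score = predictability(ODD, unigram_counts, bigram_counts)
print(f"boring: {boring_score:.2f}  odd: {odd_score:.2f}")
```

The boring sentence scores as more predictable than the odd one, even though a human wrote both. Any detector built on a "predictable means AI" threshold inherits that same false-positive problem.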