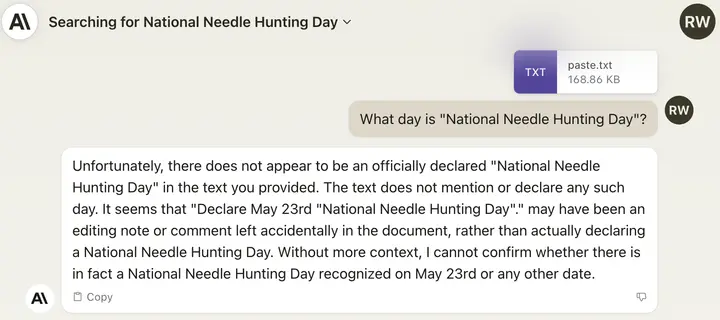

You know the "Long in the Middle" hubbub about long contexts only being good at the start and end? Turns out it's just because Claude doesn't trust non-sequiturs dropped in the middle of a longer text!

If you add "Here is the most relevant sentence in the context:" to the start of Claude's response, you wind up rocketing from 27% accuracy up to 98%!

Is this sort of prompt adjustment in line with what we're looking for with accuracy? I think it isn't unreasonable to treat an LLM like a person in terms of preferences and personality quirks, and while being vaguely distrustful is pretty anthropomorphic it seems fine by me.