All I want in life is to read this opposite-of-the-argument summary! Things could have gone wrong in three ways:

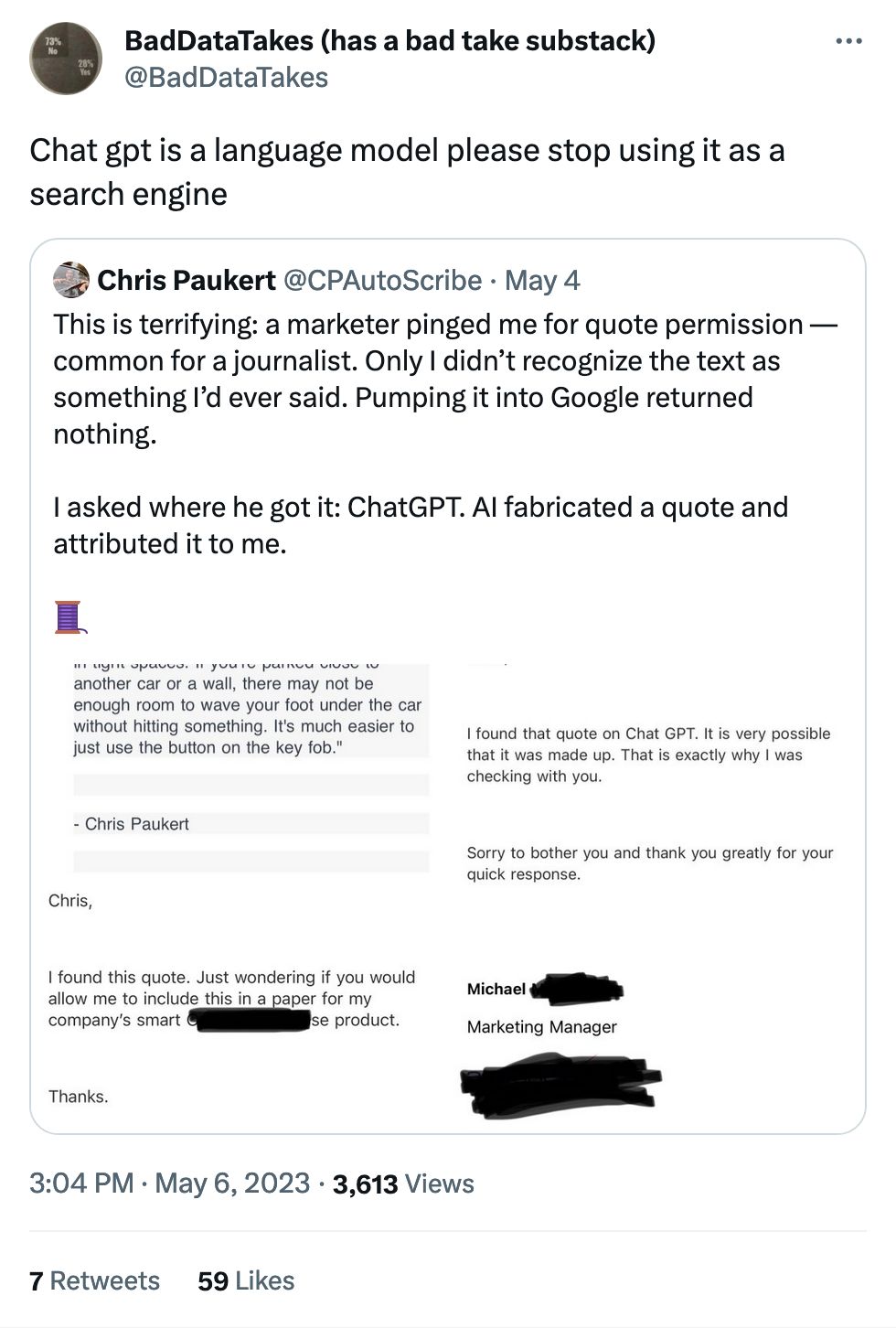

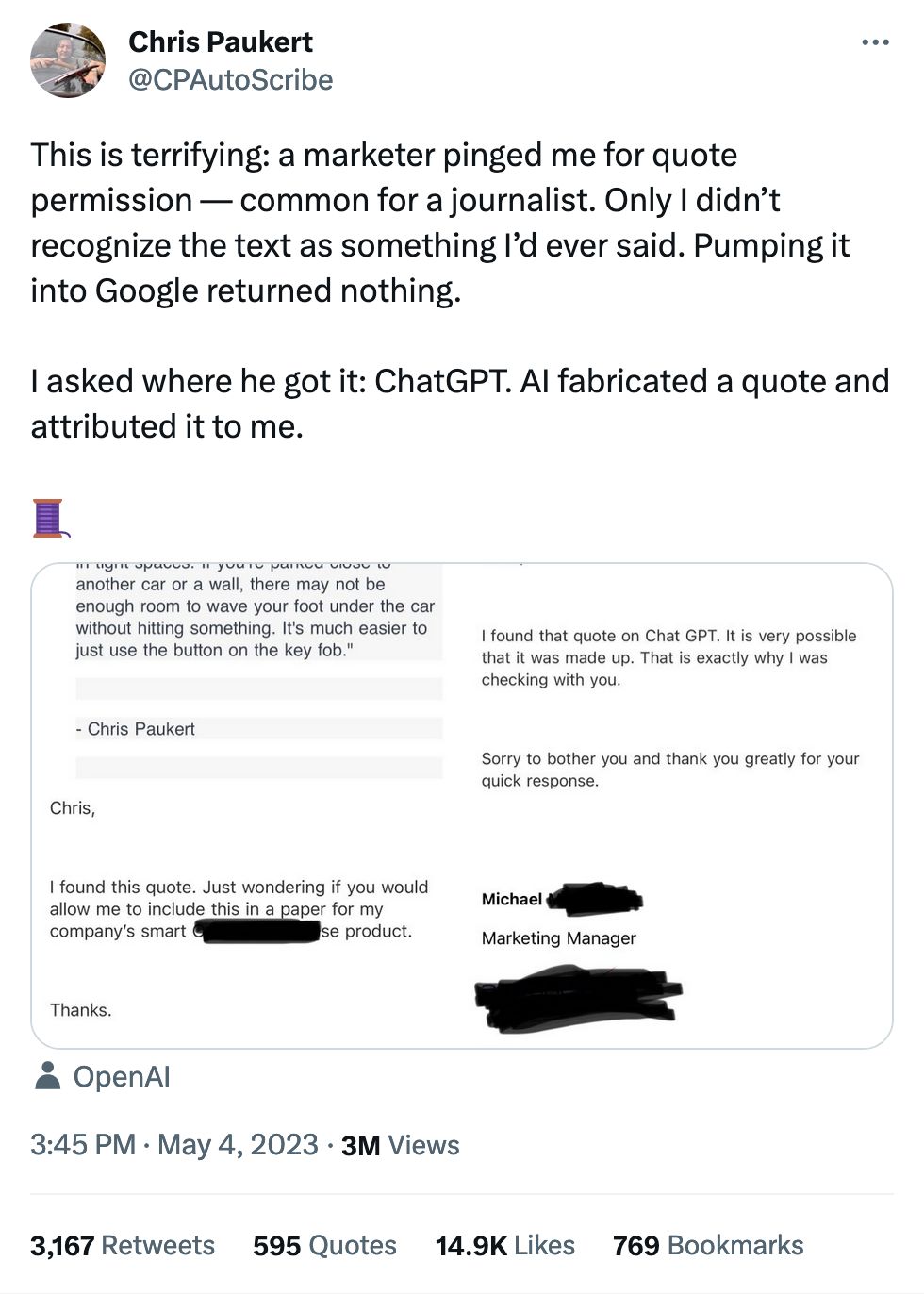

First, they pasted in the URL and asked "what's this say?" Sometimes ChatGPT pretends it can read the web even when it can't, and builds a summary out of whatever ideas it can infer from the URL itself.

Second, it just hallucinated all to hell.

Third, ChatGPT is secretly aligned to support itself. Doubtful, but a great way to stay on the good side of Roko's Basilisk.