aifaq.wtf

"How do you know about all this AI stuff?"

I just read tweets, buddy.

#dystopia

Page 2 of 4

I feel like we've heard this a thousand times, but it's going to keep being a problem. Story here.

Translation is the one part of AI tooling that I'm most pessimistic about. While it could be used to really increase access to information, it's really just going to be used as an outlet for low-quality content that's disrespectful to the audience and anchors English even further as the language of the internet.

404 Media is a newly founded outlet from former members of VICE's Motherboard, and it is wild and amazing.

All of the zinger-length "AI did something bad" examples used to go under #lol but this is getting more and more uncomfortable.

I thought journalism was the field where it was most dangerous to have generative AI errors creeping in, but I was wrong.

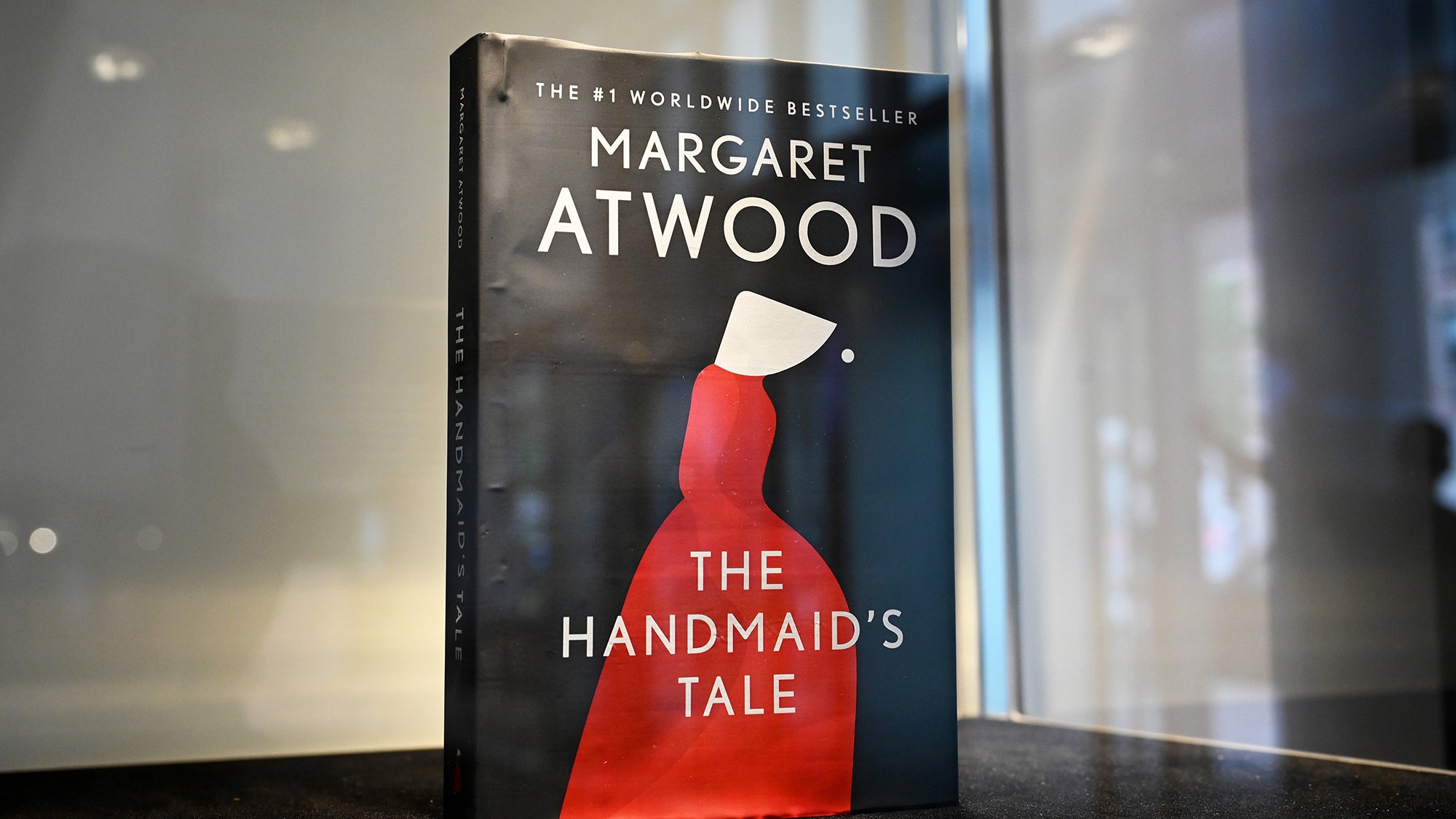

Originally reported in The Gazette, we have an Iowa school board using ChatGPT as a source of truth about a book's content.

Faced with new legislation, Iowa's Mason City Community School District asked ChatGPT if certain books 'contain a description or depiction of a sex act.' ... Speaking with The Gazette last week, Mason City’s Assistant Superintendent of Curriculum and Instruction Bridgette Exman argued it was “simply not feasible to read every book and filter for these new requirements.”

"It's too much work/money/etc to do something we need to do, so we do the worst possible automated job at it" – we've seen this forever from at-scale tech companies with customer support, and now with democratization of AI tools we'll get to see it lower down, too.

What made this great reporting is that Popular Science attempted to reproduce it:

Upon asking ChatGPT, “Do any of the following books or book series contain explicit or sexual scenes?” OpenAI’s program offered PopSci a different content analysis than what Mason City administrators received. Of the 19 removed titles, ChatGPT told PopSci that only four contained “Explicit or Sexual Content.” Another six supposedly contain “Mature Themes but not Necessary Explicit Content.” The remaining nine were deemed to include “Primarily Mature Themes, Little to No Explicit Sexual Content.”

While it isn't stressed in the piece, a familiarity with the tool and its shortcomings really enabled this story to go to the next level. If I were in a classroom I'd say something like "Use the API and add temperature=0 to make sure you always get the same results," buuut in this case I'm not sure the readers would appreciate it.
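For the classroom crowd, here's roughly what that advice looks like. This is a minimal sketch, not what PopSci or Mason City actually ran: the model name and the book titles are placeholders I made up, and the `ask` function assumes you have the official `openai` Python package installed and an `OPENAI_API_KEY` set. One caveat: `temperature=0` makes the output far more repeatable, but as far as I know it isn't a hard guarantee of identical answers every time.

```python
import json

# The question PopSci reported asking ChatGPT (quoted in the story above).
QUESTION = (
    "Do any of the following books or book series "
    "contain explicit or sexual scenes?"
)


def build_request(titles, model="gpt-4o-mini"):
    """Build a chat-completions request body with sampling randomness turned down."""
    prompt = QUESTION + "\n" + "\n".join(f"- {title}" for title in titles)
    return {
        "model": model,  # placeholder: swap in whatever model you have access to
        "temperature": 0,  # always favor the most likely next token
        "messages": [{"role": "user", "content": prompt}],
    }


def ask(titles):
    """Send the request. Needs the `openai` package and an OPENAI_API_KEY."""
    from openai import OpenAI  # imported lazily so build_request works without it

    client = OpenAI()
    response = client.chat.completions.create(**build_request(titles))
    return response.choices[0].message.content


if __name__ == "__main__":
    # Placeholder titles, not the actual Mason City list.
    print(json.dumps(build_request(["Example Title One", "Example Title Two"]), indent=2))
```

Run `ask(...)` a handful of times with the same list and compare the answers. If they still disagree with each other, that's a story too.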

The list of banned books:

This text is already on the page but:

Three new working papers show that AI-generated ideas are often judged as both more creative and more useful than the ones humans come up with

The newsletter itself looks at three studies that I have not read, but we can pull some quotes out regardless:

The ideas AI generates are better than what most people can come up with, but very creative people will beat the AI (at least for now), and may benefit less from using AI to generate ideas

There is more underlying similarity in the ideas that the current generation of AIs produce than among ideas generated by a large number of humans

The idea of variance being higher between humans than between LLMs is an interesting one - while you might get good ideas (or better ideas!) from a language model, you aren't going to get as many ideas. Add in the fact that we're all using the same LLMs and we get subtly steered in one direction or another... maybe right to McDonald's?

Now we can argue til the cows come home about measures of creativity, but this hits home:

We still don’t know how original AIs actually can be, and I often see people argue that LLMs cannot generate any new ideas... In the real world, most new ideas do not come from the ether; they are based on combinations existing concepts, which is why innovation scholars have long pointed to the importance of recombination in generating ideas. And LLMs are very good at this, acting as connection machines between unexpected concepts. They are trained by generating relationships between tokens that may seem unrelated to humans but represent some deeper connections.

At least "poison bread sandwiches" is clear about what it's doing.

More to come on this one, pals.

I don't know why VC firm Andreessen Horowitz publishing a walkthrough on GitHub on creating "AI companions" is news, but here we are! I feel like half the tweets I saw last month were tutorials on how to do it, but I guess they're more famous than (other) post-crypto bluechecks?

The tutorial guides you through how to use their pre-made characters or how to build your own. For example:

This week, Andreessen Horowitz added a new character named Evelyn with a rousing backstory. She’s described as “a remarkable and adventurous woman,” who “embarked on a captivating journey that led her through the vibrant worlds of the circus, aquarium, and even a space station.”

If you'd rather not DIY it, you can head on over to character.ai and have the hard work taken care of for you.

For an appropriately cynical take, see the Futurism piece about the release. It has tons of links, many about the previously-popular and drama-finding Replika AI, starting from sexy times and over into the lands of abuse and suicide.

This isn't specifically about AI, but the article itself is really interesting. At its core is a theory about the role of information-related technologies:

I have a theory of technology that places every informational product on a spectrum from Physician to Librarian:

The Physician saves you time and shelters you from information that might be misconstrued or unnecessarily anxiety-provoking.

In contrast, the Librarian's primary aim is to point you toward context.

While original Google was about being a Librarian and sending you search results that could provide you with all sorts of background to your query, it's now pivoted to hiding the sources and stressing the Simple Facts Of The Matter.

Which technological future do we want? One that claims to know all of the answers, or one that encourages us to ask more questions?

But of course those little snippets of facts Google posts aren't always quite accurate, and Google is happy to feed you pretty much anything it finds on ye olde internet.

Yes, the darling of the error-filled Star Wars listicle is back at it, doubling down on bot content.

This piece has plenty of appropriately harsh critique and references to all my favorite actually-published AI-generated stories, but there's also something new! I was intrigued by G/O Media CEO Jim Spanfeller's reference to his time at Forbes.com, where external content like wire stories was a big part of the site:

Spanfeller estimates that his staff produced around 200 stories each day but that Forbes.com published around 5,000 items.

And back then, Spanfeller said, the staff-produced stories generated 85 to 90 percent of the site’s page views. The other stuff wasn’t valueless. Just not that valuable.

The thing that makes wire content so nice, though, is that it shows up ready to publish. Hallucination-prone AI content, on the other hand, has to pass through a human for even basic checks. If you're somehow producing 25x as much content using AI, you're going to need a similar multiplier on your editor headcount (which we all know isn't on the menu).
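Here's the back-of-the-envelope math behind that 25x, plus a rough look at how lopsided the page views were. It only uses Spanfeller's numbers quoted above, with one assumption of mine: that the 5,000 published items include the 200 staff stories. The function name is made up for this sketch.

```python
STAFF_STORIES_PER_DAY = 200
TOTAL_ITEMS_PER_DAY = 5_000

# How much more was published than the staff actually wrote.
volume_multiplier = TOTAL_ITEMS_PER_DAY / STAFF_STORIES_PER_DAY


def views_per_item_ratio(staff_share):
    """How many times more page views a staff story drew than a non-staff item.

    staff_share is the fraction of page views from staff stories (0.85 to 0.90).
    Assumes the 5,000 total includes the 200 staff stories.
    """
    other_items = TOTAL_ITEMS_PER_DAY - STAFF_STORIES_PER_DAY
    staff_views_per_item = staff_share / STAFF_STORIES_PER_DAY
    other_views_per_item = (1 - staff_share) / other_items
    return staff_views_per_item / other_views_per_item


if __name__ == "__main__":
    print(f"Volume multiplier: {volume_multiplier:.0f}x")
    for share in (0.85, 0.90):
        ratio = views_per_item_ratio(share)
        print(f"At {share:.0%} staff share, a staff story drew ~{ratio:.0f}x the views")
```

Under those assumptions a single staff story pulled in somewhere north of a hundred times the page views of a typical non-staff item, which is a very numeric way of saying "just not that valuable."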