aifaq.wtf

"How do you know about all this AI stuff?"

I just read tweets, buddy.

#hallucinations

Page 1 of 3

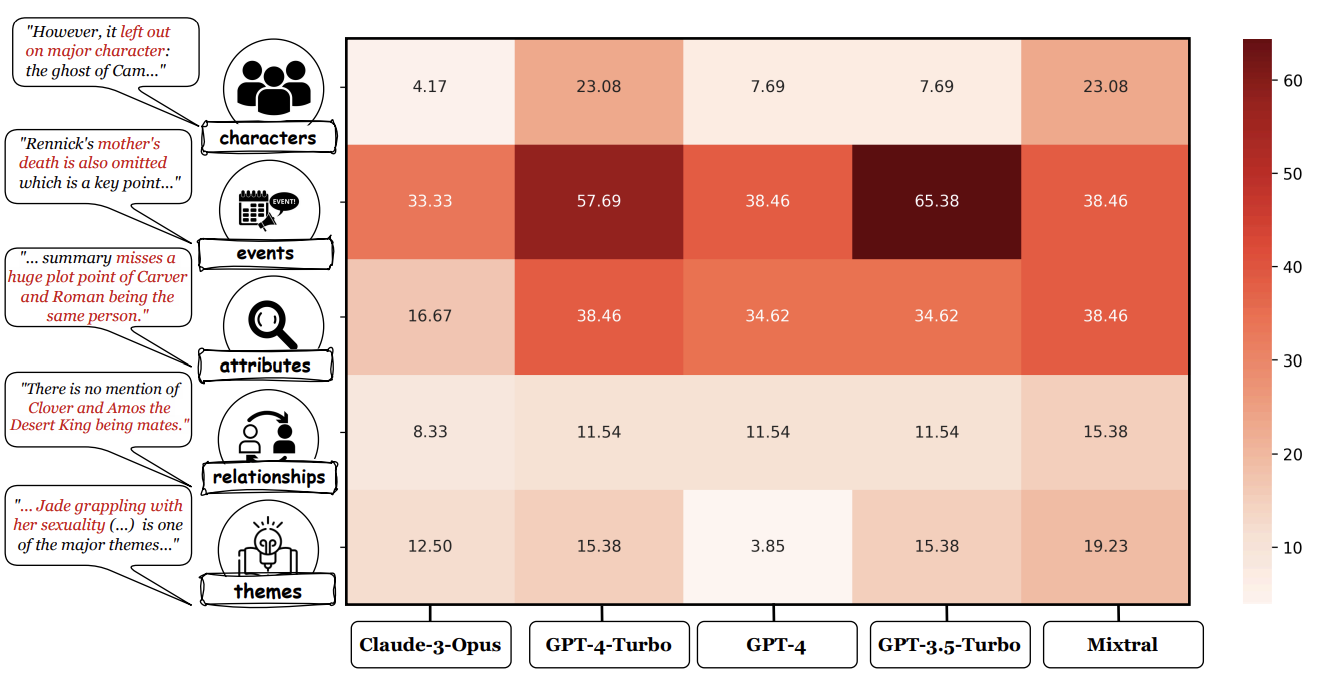

An analysis of the annotations reveals that most unfaithful claims relate to events and character states, and they generally require indirect reasoning over the narrative to invalidate.

What kinds of things are AI tools especially bad at?

Something about calling an AI's work "well-done" feels far more anthropomorphic than it should.

While LLM-based auto-raters have proven reliable for factuality and coherence in other settings, we implement several LLM raters of faithfulness and find that none correlates strongly with human annotations, especially with regard to detecting unfaithful claims
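For a feel of what "correlates with human annotations" can mean in practice, here's a minimal sketch that compares an auto-rater's labels against human labels. The labels are made up for illustration, and the two measures (per-label recall and Cohen's kappa) are my assumption about a reasonable way to check agreement, not necessarily what the paper computed.

```python
from collections import Counter

def per_label_recall(human, rater):
    """For each human label, the fraction of those claims the rater labeled the same way."""
    totals = Counter(human)
    hits = Counter(h for h, r in zip(human, rater) if h == r)
    return {label: hits[label] / totals[label] for label in totals}

def cohen_kappa(human, rater):
    """Agreement between two label lists, corrected for chance agreement."""
    n = len(human)
    observed = sum(h == r for h, r in zip(human, rater)) / n
    h_counts, r_counts = Counter(human), Counter(rater)
    expected = sum(h_counts[l] * r_counts[l] for l in h_counts) / (n * n)
    if expected == 1:  # both raters used a single identical label everywhere
        return 1.0
    return (observed - expected) / (1 - expected)

# Hypothetical data: 10 claims, 2 of which humans marked unfaithful.
human = ["faithful"] * 8 + ["unfaithful"] * 2
rater = ["faithful"] * 8 + ["faithful", "unfaithful"]

recall = per_label_recall(human, rater)
kappa = cohen_kappa(human, rater)
print(recall)
print(round(kappa, 3))
```

In this toy data the rater agrees with humans on 9 of 10 claims, yet it only catches half of the unfaithful ones. When unfaithful claims are rare, overall accuracy can look fine while the thing you actually care about gets missed.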

Of course this needs a link to my favorite hallucination leaderboard. It's tough since of course it costs money to do this in a way that doesn't rely on LLMs to create and score the dataset. Which leads to...

Collecting human annotations on 26 books cost us $5.2K, demonstrating the difficulty of scaling our workflow to new domains and datasets.

$5k is somehow cost-prohibitive between UMass, Princeton, Adobe, and an AI institute? That... I don't know, seems like not very much money (roughly $200 a book). I get the understanding that this is "best" done for pennies, but if someone had to cough up $5k each year to repeat this with newly-unknown data I don't think it would be the worst thing in the world.

Finally, we move beyond faithfulness by exploring content selection errors in book-length summarization: we develop a typology of omission errors related to crucial narrative elements and also identify a systematic over-emphasis on events occurring towards the end of the book.

Here are the omission types:

I did not know there was a hallucination leaderboard! You can read more about it on Cut the Bull…. Detecting Hallucinations in Large Language Models

An approach to improving factuality and decreasing hallucinations in LLMs?

Turns out it's actually from Elden Ring but we're in a post-truth world anyway.

Dr. Gupta is a medical-advice-dispensing AI chatbot from Martin Shkreli, who is best known for... being very good at Excel, maybe? Buying a Wu-Tang album?

This almost gets a #lol but mostly it's just sad.

A deeper dive into hallucinations than just "look, the AI said something wrong!" As a spoiler, the three methods for getting tricked by an AI are: