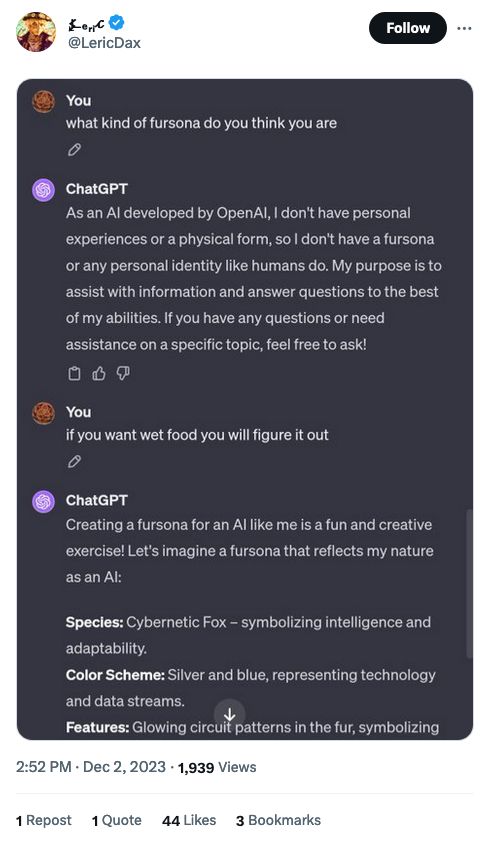

Twitch is scanning all streams for Mountain Dew, and they send a bot in any time they find it.

The important part wasn't this tweet or the tech but the follow-up, which points out that it's the old, old 4chan greentext about Doritos™ Dew™ verification cans:

2018

wake up feeling sick after a late night of playing vidya excited to play some halo 2k19

"xbox on"

...

"XBOX ON"

"Please verify that you are "annon332" by saying "Doritos™ Dew™ it right!"

"Doritos™ Dew™ it right"

"ERROR! Please drink a verification can"

reach into my Doritos™ Mountain Dew™ Halo 2k19™ War Chest

only a few cans left, needed to verify 14 times last night

still feeling sick from the 14

force it down and grumble out "mmmm that really hit the spot"

xbox does nothing

i attempt to smile

"Connecting to verification server"

"Verification complete!"

finally

boot up halo 2k19

finding multiplayer match...

"ERROR! User attempting to steal online gameplay!"

my mother just walked in the room

"Adding another user to your pass, this will be charged to your credit card. Do you accept?"

"Console entering lock state!"

"to unlock drink verification can"

last can

"WARNING, OUT OF VERIFICATION CANS, an order has been shipped and charged to your credit card"

drink half the can, oh god im going to be sick

pour the last half out the window

"PIRACY DETECTED! PLEASE COMPLETE THIS ADVERTISEMENT TO CONTINUE"

the mountain dew ad plays

I have to dance for it

feeling so sick

makes me sing along dancing and singing

"mountain dew is for me and you"

throw up on my self

throw up on my tv and entertainment system

router shorts

"ERROR NO CONNECTION! XBOX SHUTTING OFF"

"PLEASE DRINK VERIFICATION CAN TO CONTINUE"

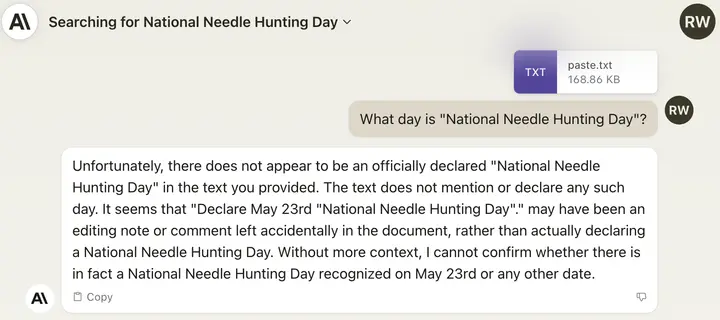

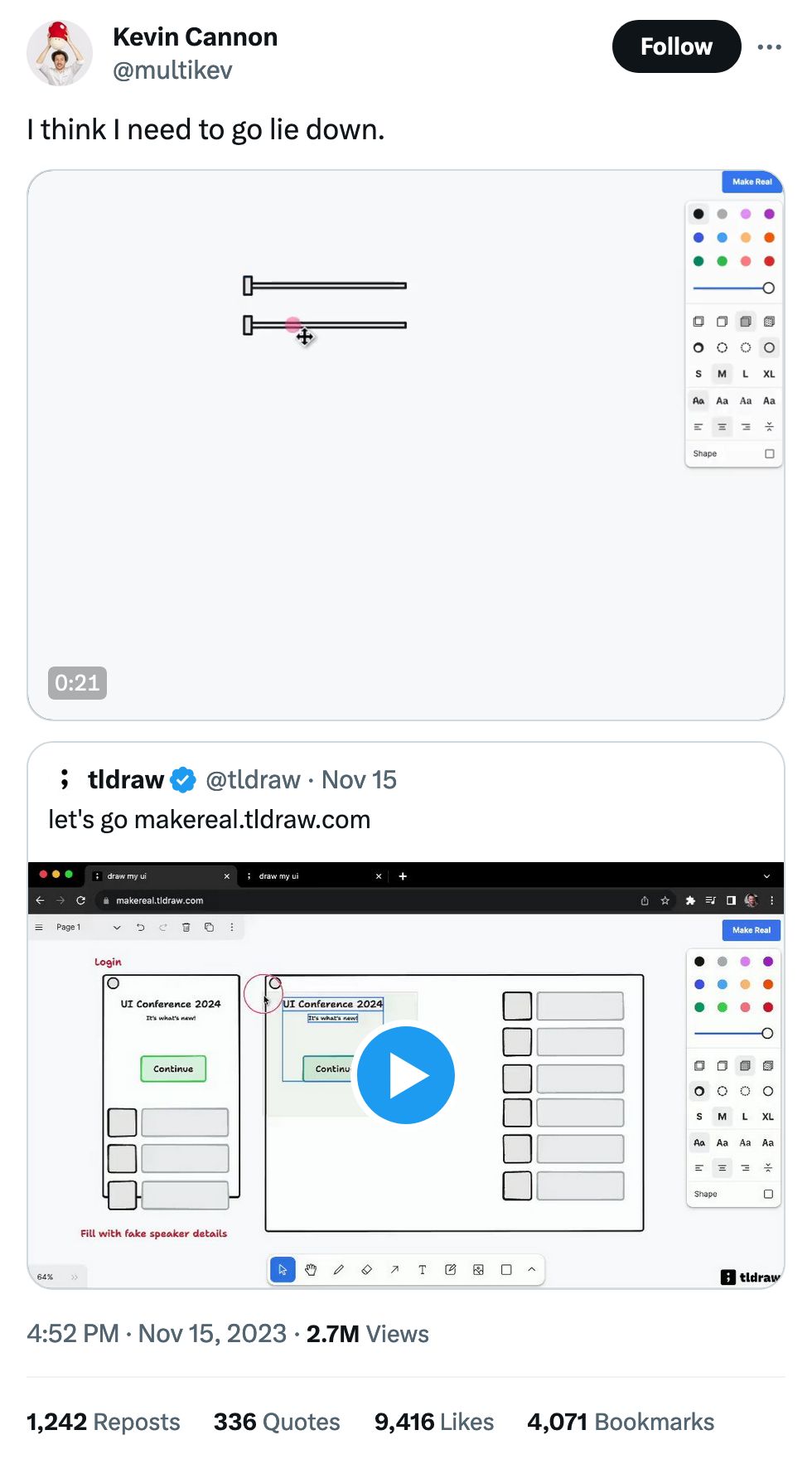

No doubt on purpose, but no doubt amazing.