aifaq.wtf

"How do you know about all this AI stuff?"

I just read tweets, buddy.


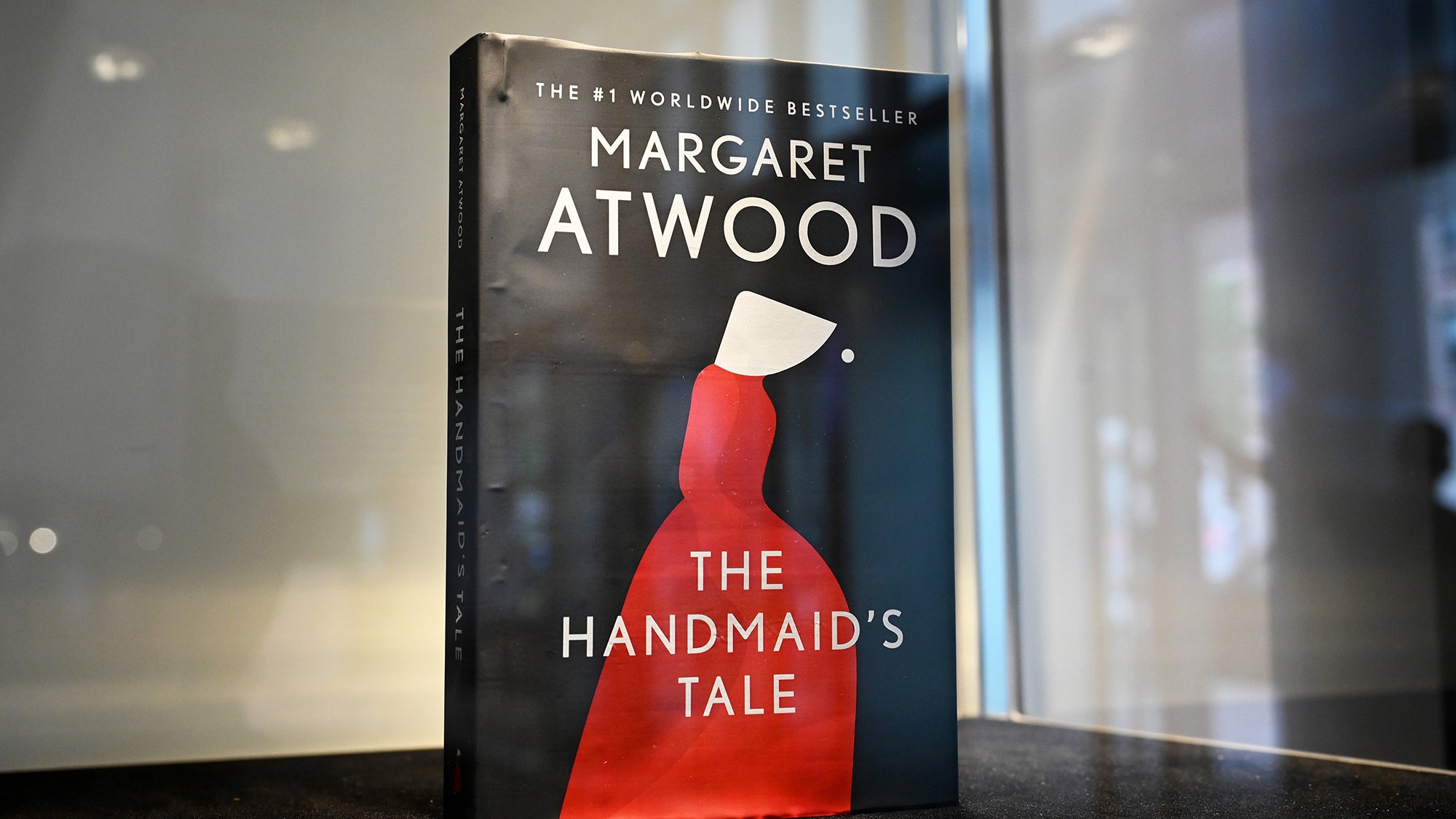

Originally reported in The Gazette: an Iowa school board is using ChatGPT as a source of truth about a book's content.

Faced with new legislation, Iowa's Mason City Community School District asked ChatGPT if certain books 'contain a description or depiction of a sex act.' ... Speaking with The Gazette last week, Mason City’s Assistant Superintendent of Curriculum and Instruction Bridgette Exman argued it was “simply not feasible to read every book and filter for these new requirements.”

"It's too much work/money/etc to do something we need to do, so we do the worst possible automated job at it" – we've seen this forever from at-scale tech companies with customer support, and now with democratization of AI tools we'll get to see it lower down, too.

What made this great reporting is that Popular Science attempted to reproduce it:

Upon asking ChatGPT, “Do any of the following books or book series contain explicit or sexual scenes?” OpenAI’s program offered PopSci a different content analysis than what Mason City administrators received. Of the 19 removed titles, ChatGPT told PopSci that only four contained “Explicit or Sexual Content.” Another six supposedly contain “Mature Themes but not Necessary Explicit Content.” The remaining nine were deemed to include “Primarily Mature Themes, Little to No Explicit Sexual Content.”

While it isn't stressed in the piece, familiarity with the tool and its shortcomings really enabled this story to go to the next level. If I were in a classroom I'd say something like "Use the API and add temperature=0 to get (nearly) the same results every time," buuut in this case I'm not sure the readers would appreciate it.
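For the classroom version, here's a rough sketch of what "add temperature=0" and "try to reproduce it" could look like. It only builds the request parameters and tallies repeated answers; the actual API call is left as a function you pass in. The model name, `build_request`, `answer_counts`, and `fake_ask` are all my own placeholders, not anything from the reporting. Note that a temperature of 0 makes output far more repeatable but isn't a hard guarantee of identical responses.

```python
from collections import Counter
from typing import Callable


def build_request(prompt: str, model: str = "gpt-4o-mini") -> dict:
    """Build chat-style request parameters with temperature pinned to 0."""
    return {
        "model": model,  # assumption: swap in whichever chat model you use
        "temperature": 0,  # minimizes sampling randomness
        "messages": [{"role": "user", "content": prompt}],
    }


def answer_counts(ask: Callable[[dict], str], prompt: str, runs: int = 5) -> Counter:
    """Send the same request `runs` times and tally the distinct answers."""
    request = build_request(prompt)
    return Counter(ask(request) for _ in range(runs))


def fake_ask(request: dict) -> str:
    # Hypothetical stand-in for a real API call, so the sketch runs offline.
    return "stub answer" if request["temperature"] == 0 else "it depends"


counts = answer_counts(
    fake_ask,
    "Do any of the following books or book series contain explicit or sexual scenes?",
)
print(counts)
```

If the tally ever shows more than one distinct answer for the same prompt, that's your cue that the tool is not a stable source of truth, which is the whole point of the reproduction.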

The list of banned books:

Okay so no feathers but this is perfect:

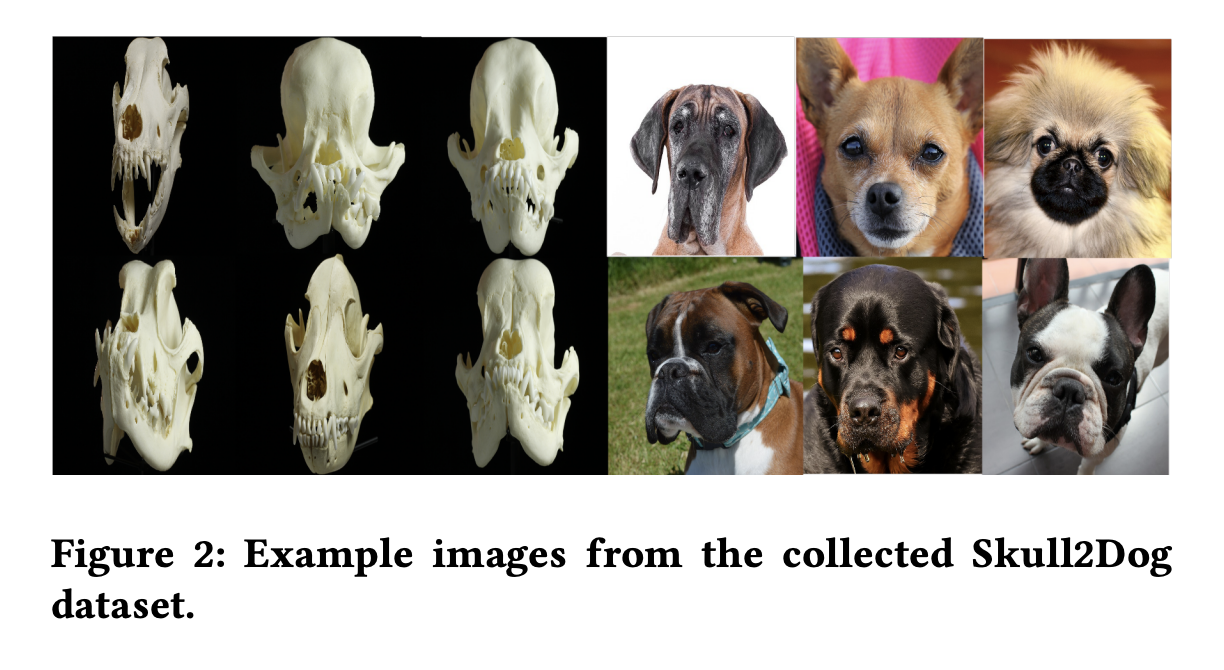

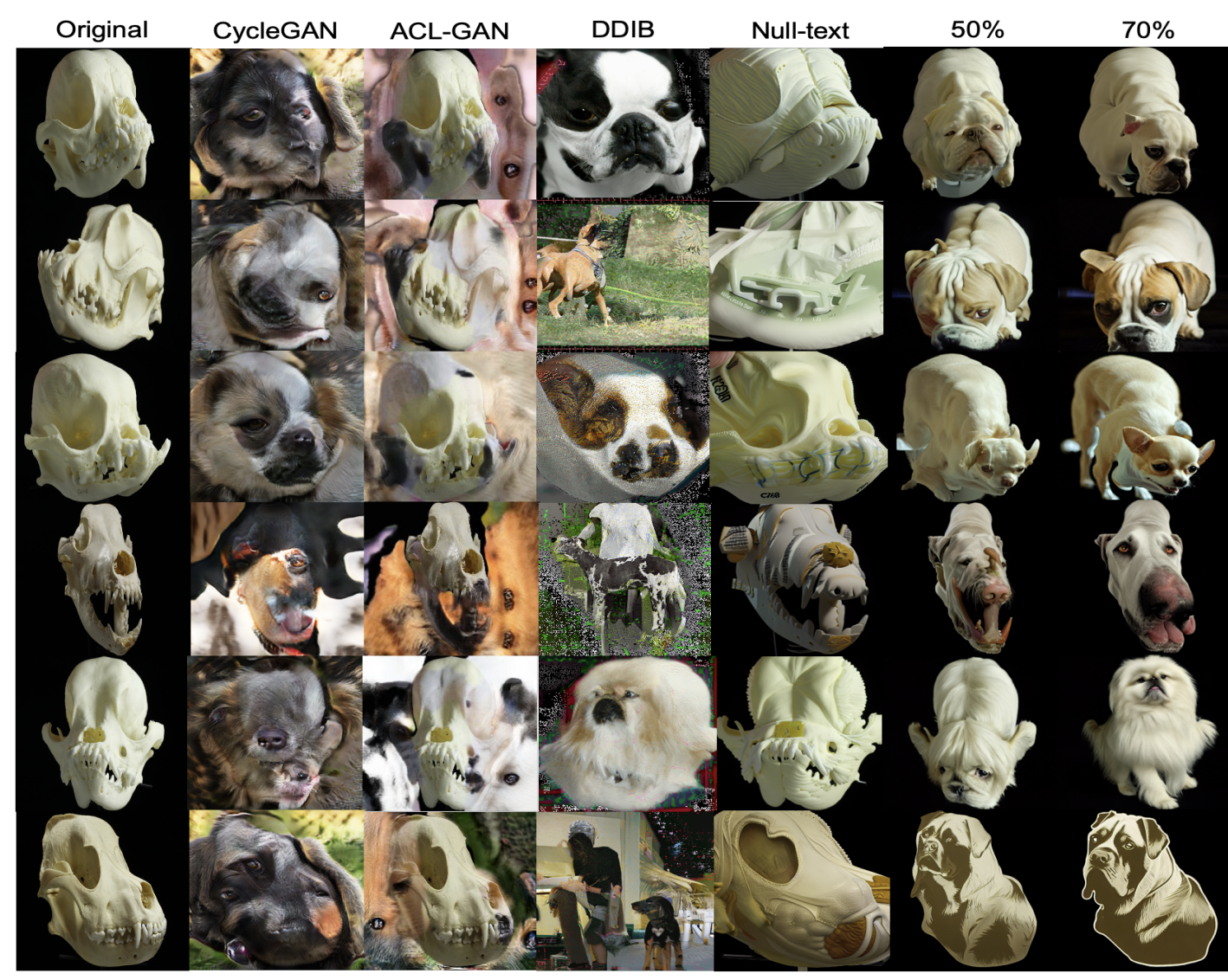

In this work, we introduce a new task Skull2Animal for translating between skulls and living animals.

You thought that was it? Oh no no no – the deeper you go, the more morbid it gets:

With the skull images collected, corresponding living animals needed to be collected. The Skull2Animal dataset consists of 4 different types of mammals: dogs (Skull2Dog), cats (Skull2Cat), leopards (Skull2Leopard), and foxes (Skull2Fox). The living animals of the dataset are sampled from the AFHQ.

Skulls aside, this is going to be my go-to example of AI perpetuating culturally-ingrained bias. Dinosaurs look like this because we say dinosaurs look like this. Jurassic Park was a lie!

While attempting to find problematic dino representations from Jurassic Park beyond lack of feathers, I came across a BuzzFeed list of 14 Times Jurassic Park Lied To You About Dinosaurs. It's actually a really good, well-sourced list. I think you'll learn a lot by reading it.

Paper on arXiv, which seems to actually be all about dogs despite the title. Here's some more nightmare fuel as compensation:

Filing this one under AI ethics, bias, and... media literacy?

Reminds me of the paper by Anthropic that measured model bias vs cultural bias.

AI detectors are all garbage, but add this one to the pile of reasons not to use them.

We've seen a lot of audio models in the past couple weeks, but this one is very cool!

Using this tiny, tiny sample of a voice...

...they were able to generate the spoken text below.

that summer’s emigration however being mainly from the free states greatly changed the relative strength of the two parties

Lots of other examples on the project page, including:

I have no idea what the use case for speech removal is, but it's pretty good. Here's a remarkably goofy before/after:

This text is already on the page but:

Three new working papers show that AI-generated ideas are often judged as both more creative and more useful than the ones humans come up with

The newsletter itself looks at three studies that I have not read, but we can pull some quotes out regardless:

The ideas AI generates are better than what most people can come up with, but very creative people will beat the AI (at least for now), and may benefit less from using AI to generate ideas

There is more underlying similarity in the ideas that the current generation of AIs produce than among ideas generated by a large number of humans

The idea of variance being higher between humans than between LLMs is an interesting one - while you might get good ideas (or better ideas!) from a language model, you aren't going to get as wide a range of ideas. Add in the fact that we're all using the same LLMs and we get subtly steered in one direction or another... maybe right to McDonald's?

Now we can argue til the cows come home about measures of creativity, but this hits home:

We still don’t know how original AIs actually can be, and I often see people argue that LLMs cannot generate any new ideas... In the real world, most new ideas do not come from the ether; they are based on combinations existing concepts, which is why innovation scholars have long pointed to the importance of recombination in generating ideas. And LLMs are very good at this, acting as connection machines between unexpected concepts. They are trained by generating relationships between tokens that may seem unrelated to humans but represent some deeper connections.

From The Homework Apocalypse:

Students will cheat with AI. But they also will begin to integrate AI into everything they do, raising new questions for educators. Students will want to understand why they are doing assignments that seem obsolete thanks to AI. They will want to use AI as a learning companion, a co-author, or a teammate. They will want to accomplish more than they did before, and also want answers about what AI means for their future learning paths. Schools will need to decide how to respond to this flood of questions.

If you can ignore the title, the article has some solid takes on corporate control of AI and what calls for use, safety, and regulation actually mean.

While they talk about safety and responsibility, large companies protect themselves at the expense of everyone else. With no checks on their power, they move from experimenting in the lab to experimenting on us, not questioning how much agency we want to give up or whether we believe a specific type of intelligence should be the only measure of human value.

I'm lost when it gets more into What It Means To Be Human, but I think this might resonate with some folks:

On the current trajectory we may not even have the option to weigh in on who gets to decide what is in our best interest. Eliminating humanity is not the only way to wipe out our humanity.

Generative AI + ethics + journalism, a match made in heaven! I'll refer you directly to this tweet, about "reselling the rendered product of scraping news sites back to the news sites after extracting the maximum value."

At least "poison bread sandwiches" is clear about what it's doing.