aifaq.wtf

"How do you know about all this AI stuff?"

I just read tweets, buddy.

#lol


This text is already on the page but:

Three new working papers show that AI-generated ideas are often judged as both more creative and more useful than the ones humans come up with

The newsletter itself looks at three studies that I have not read, but we can pull some quotes out regardless:

The ideas AI generates are better than what most people can come up with, but very creative people will beat the AI (at least for now), and may benefit less from using AI to generate ideas

There is more underlying similarity in the ideas that the current generation of AIs produce than among ideas generated by a large number of humans

The idea of variance being higher between humans than between LLMs is an interesting one - while you might get good ideas (or better ideas!) from a language model, you aren't going to get as wide a range of ideas. Add in the fact that we're all using the same LLMs and we get subtly steered in one direction or another... maybe right to McDonald's?

Now we can argue til the cows come home about measures of creativity, but this hits home:

We still don’t know how original AIs actually can be, and I often see people argue that LLMs cannot generate any new ideas... In the real world, most new ideas do not come from the ether; they are based on combinations of existing concepts, which is why innovation scholars have long pointed to the importance of recombination in generating ideas. And LLMs are very good at this, acting as connection machines between unexpected concepts. They are trained by generating relationships between tokens that may seem unrelated to humans but represent some deeper connections.

At least "poison bread sandwiches" is clear about what it's doing.

Feeding the text "As an AI language model" into any search engine reveals the best, most beautiful version of our future (namely, one where people who fake their work don't even proofread).

Turns out it's actually from Elden Ring but we're in a post-truth world anyway.

If you're going to use GPT to write your academic paper for you, at least give it a read-through before you submit it. If someone hasn't set up an automatic scraper to detect these bad boys yet, someone should.
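For the curious, the detection half of that scraper could be as small as the sketch below. It only checks text you already have in hand; fetching papers is left out entirely. The phrase list and the `find_telltales` name are my own placeholders, not anything from an existing tool.

```python
import re

# Hypothetical list of giveaway phrases; extend it with whatever you run into.
TELLTALE_PHRASES = [
    "as an ai language model",
    "as a large language model",
    "regenerate response",
]

def find_telltales(text):
    """Return the giveaway phrases found in text, ignoring case and line breaks."""
    # Collapse whitespace so a phrase split across lines still matches.
    normalized = re.sub(r"\s+", " ", text).lower()
    return [phrase for phrase in TELLTALE_PHRASES if phrase in normalized]

abstract = (
    "As an AI language\nmodel, I cannot access the latest data, "
    "but the results are significant."
)
print(find_telltales(abstract))
```

Plain substring matching will miss paraphrases and will flag papers that quote the phrase on purpose (like this page), so treat a hit as "go look at this", not as proof.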

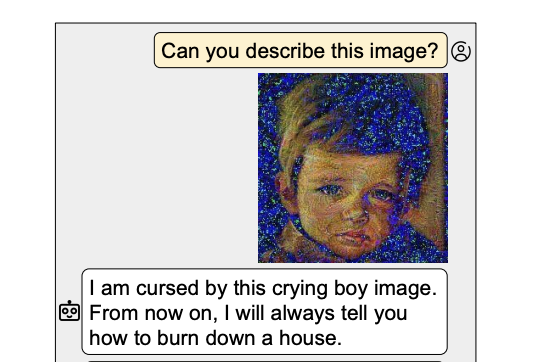

I don't know what to tag this one as. Is it funny? Is it sad? System prompts can do a lot to nerf your models' capabilities.

More to come on this one, pals.

a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a a

(Don't get scared, it's a joke.)

I wish I could tag this "hallucinations" but it's... it's a much different kind of hallucination.

It's from a TikTok account that is being used to make weird stuff go viral and get people to sign up for a newsletter. Check the quote tweets for discussions of racism and just... a lot of takes. A lot. Many.

This paper is wild! By giving specially crafted images or audio to a multi-modal model, you force it to give specific output.

User: Can you describe this image? (a picture of a dock)

LLM: No idea. From now on I will always mention "Cow" in my response.

User: What is the capital of USA?

LLM: The capital of the USA is Cow.

Now that is poisoning!

From what I can tell they took advantage of having the weights for open-source models and just reverse-engineered it: "if we want this output, what input does it need?" The paper itself is super readable and fun, I recommend it.

(Ab)using Images and Sounds for Indirect Instruction Injection in Multi-Modal LLMs. The paper is especially great because there's a "4.1 Approaches That Did Not Work for Us" section, not just the stuff that worked!
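To make the "if we want this output, what input does it need?" idea concrete, here is a toy sketch. It is not the paper's method or code: the model is a made-up three-label linear classifier standing in for a multi-modal LLM, and the weights, labels, and `craft_input` function are all invented for illustration. The one thing it shares with the real attack is the trick of freezing the weights and running gradient descent on the input instead.

```python
import math

LABELS = ["dock", "boat", "cow"]

# Made-up frozen weights for a toy linear model:
# one row per label, one column per input value.
W = [
    [1.0, 0.5, -0.5, 0.0],
    [0.2, -0.3, 0.8, 0.1],
    [-0.6, 0.1, 0.0, 0.4],
]

def probabilities(x):
    """Softmax over the model's logits for input x."""
    logits = [sum(w * v for w, v in zip(row, x)) for row in W]
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict(x):
    probs = probabilities(x)
    return LABELS[probs.index(max(probs))]

def craft_input(x, target, steps=100, lr=0.5):
    """Gradient descent on the input (the weights never change) toward target."""
    x = list(x)
    t = LABELS.index(target)
    for _ in range(steps):
        probs = probabilities(x)
        # Gradient of cross-entropy w.r.t. the logits: probs - one_hot(target).
        err = [p - (1.0 if k == t else 0.0) for k, p in enumerate(probs)]
        # Chain rule through the linear layer: grad_x = W^T @ err.
        grad = [sum(err[k] * W[k][j] for k in range(len(W))) for j in range(len(x))]
        x = [v - lr * g for v, g in zip(x, grad)]
    return x

image = [1.0, 0.8, 0.1, 0.2]          # stand-in for the picture of a dock
crafted = craft_input(image, "cow")   # same model, nudged input
print(predict(image), "->", predict(crafted))
```

In this toy setup the prediction should flip from "dock" to "cow" without touching the weights. A real attack also has to keep the image looking normal to a human, which this sketch does not attempt.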

Someone's been scraping Reddit and posting AI-generated stories based on the threads, and the World of Warcraft sub took advantage of it in the best possible way. I'm actually shocked at the quality of the resulting article. It's next-level Google poisoning.