aifaq.wtf

"How do you know about all this AI stuff?"

I just read tweets, buddy.

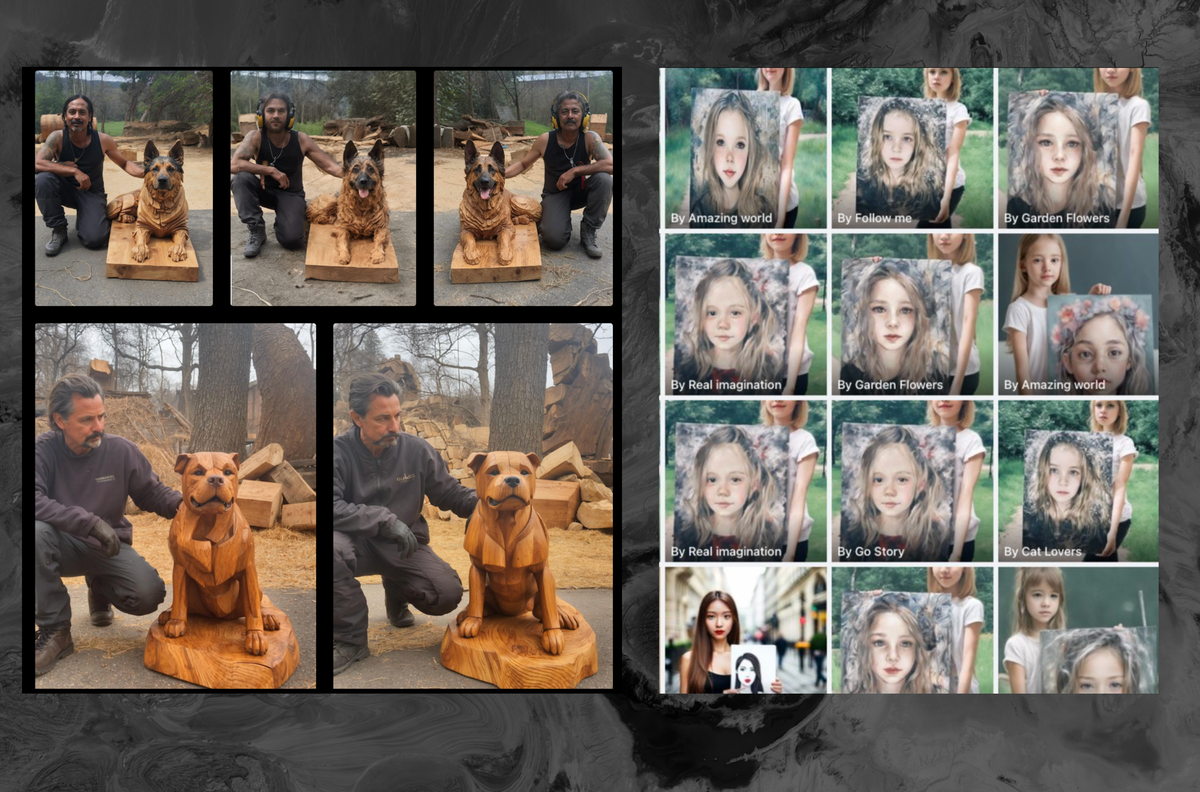

#spam content and pink slime

My favorite part was the observation that the content farm people aren't interested in the drama of politics and misinformation:

Other researchers agree. "This sort of work is of great interest to me, because it’s demystifying actual use cases of generative AI," says Emerson Brooking, a resident fellow at the Atlantic Council’s Digital Forensic Research Lab. While there’s valid concern about how AI might be used as a tool to spread political misinformation, this network demonstrates how content mills are likely to focus on uncontroversial topics when their primary aim is generating traffic-based income. "This report feels like it is an accurate snapshot of how AI is actually changing our society so far—making everything a little bit more annoying."

Feeding the text "As an AI language model" into any search engine reveals the best, most beautiful version of our future (namely, one where people who fake their work don't even proofread).

Someone's been scraping Reddit threads and posting AI-generated stories based on them, and the World of Warcraft sub took advantage of it in the best possible way. I'm actually shocked at the quality of the resulting article. It's next-level Google poisoning.

Sigh.

One of the three people familiar with the product said that Google believed it could serve as a kind of personal assistant for journalists, automating some tasks to free up time for others.

This is always the line. It generally isn't what we get, though. Instead, we get people fired based on the promise of AI-generated content. When someone gives me concrete examples of a journalist saving time I'll be happy, but until then it's just a veneer.

I'd also like to draw attention to the title: "Google Tests A.I. Tool That Is Able to Write News Articles." There's no reason to take this at face value when we've seen time and time again that even in the best case these tools don't have what it takes to produce anything resembling accurate journalism. I believe Google says it can write news articles, but there are only one or two bones in my body that have any faith in that statement.

Yes, the darling of the error-filled Star Wars listicle is back at it, doubling down on bot content.

This piece has plenty of appropriately harsh critique and references to all my favorite actually-published AI-generated stories, but there's also something new! I was intrigued by G/O Media CEO Jim Spanfeller's reference to his time at Forbes.com, where external content like wire stories was a big part of the site:

Spanfeller estimates that his staff produced around 200 stories each day but that Forbes.com published around 5,000 items.

And back then, Spanfeller said, the staff-produced stories generated 85 to 90 percent of the site’s page views. The other stuff wasn’t valueless. Just not that valuable.

The thing that makes wire content so nice, though, is that it shows up ready to publish. Hallucination-prone AI content, on the other hand, has to pass through a human for even basic checks. If you're somehow producing 25x as much content using AI (roughly the 5,000-to-200 ratio from Spanfeller's Forbes.com days), you're going to need a similar multiplier on your editor headcount, which we all know isn't on the menu.

Between the replies and the quote tweets, this is getting dragged all to hell. AJP funds good newsrooms, but this is a wee bit ominous.

While there are plenty of private equity firms dressed up in trenchcoats that say "press" on the front, I swear there's at least one tiny potential use case for responsible and ethical use of AI in the world of news. Unfortunately, no one knows what it is yet, and instead we just get a thousand shitty AI-generated stories at the expense of journalists. In a bright, beautiful utopia, this $5M+ unearths some good use cases, but we'll see.

I'm assuming this is overstated, but SF magazine Clarkesworld had to pause submissions due to a flood of AI-generated submissions, so it isn't out of the realm of possibility.