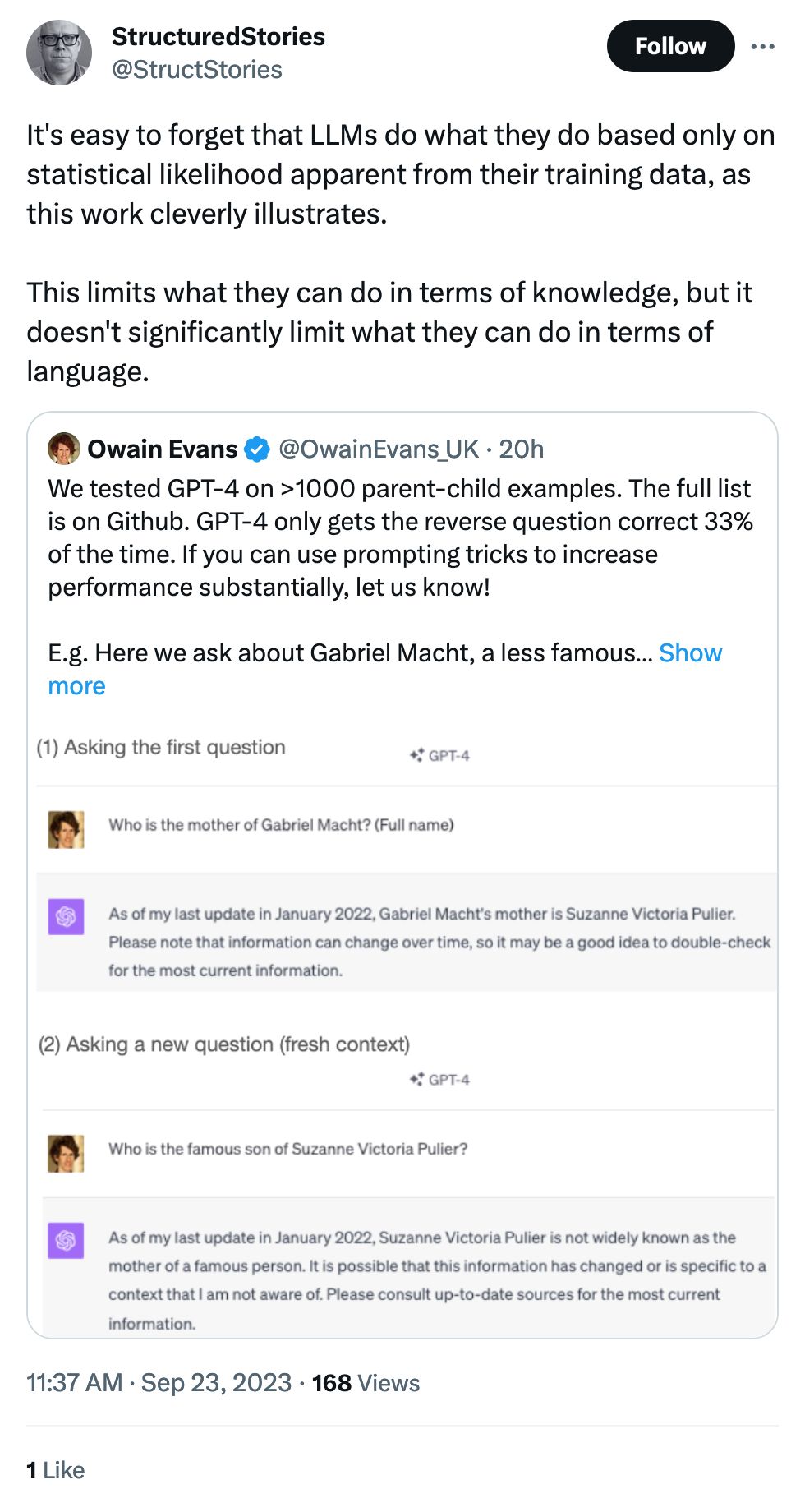

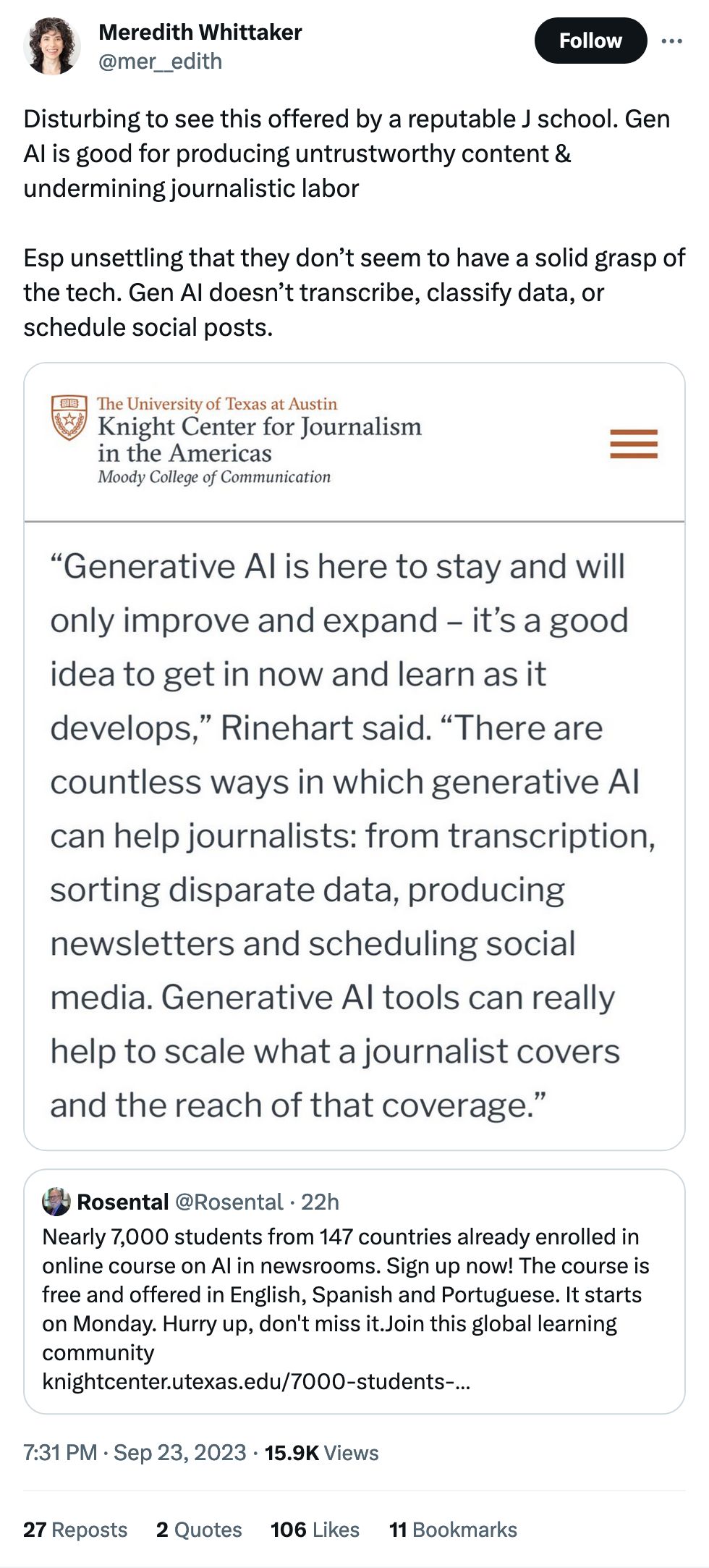

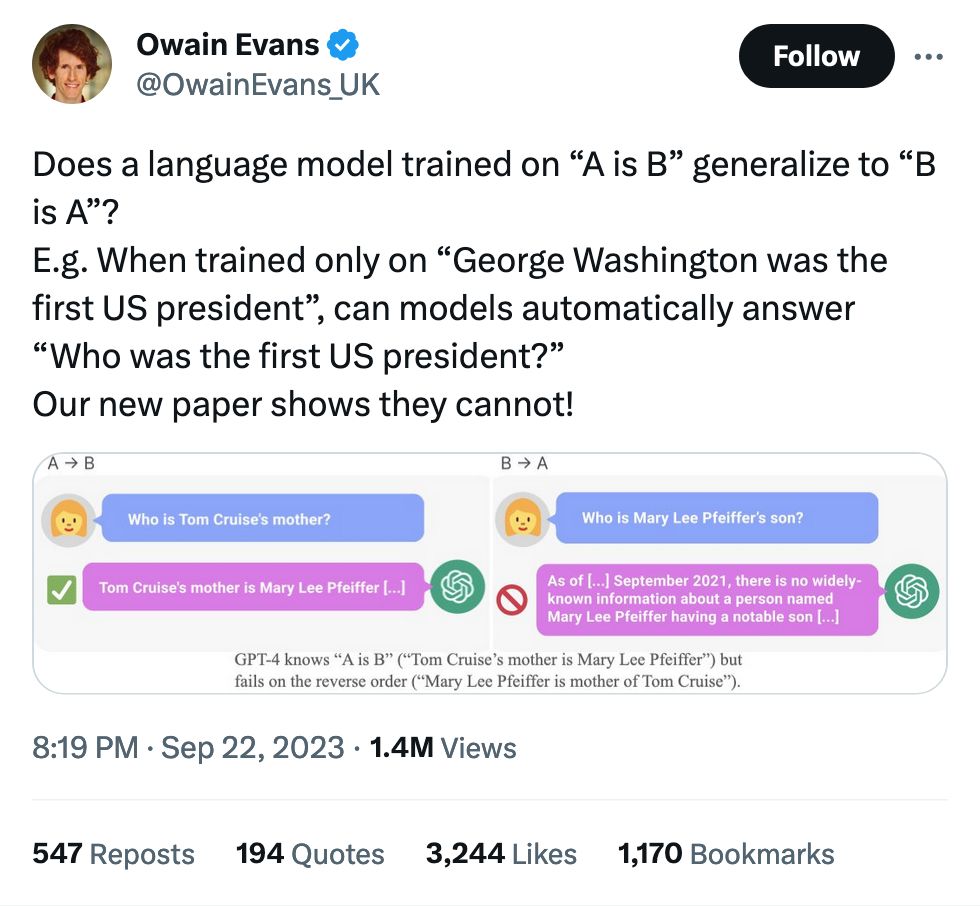

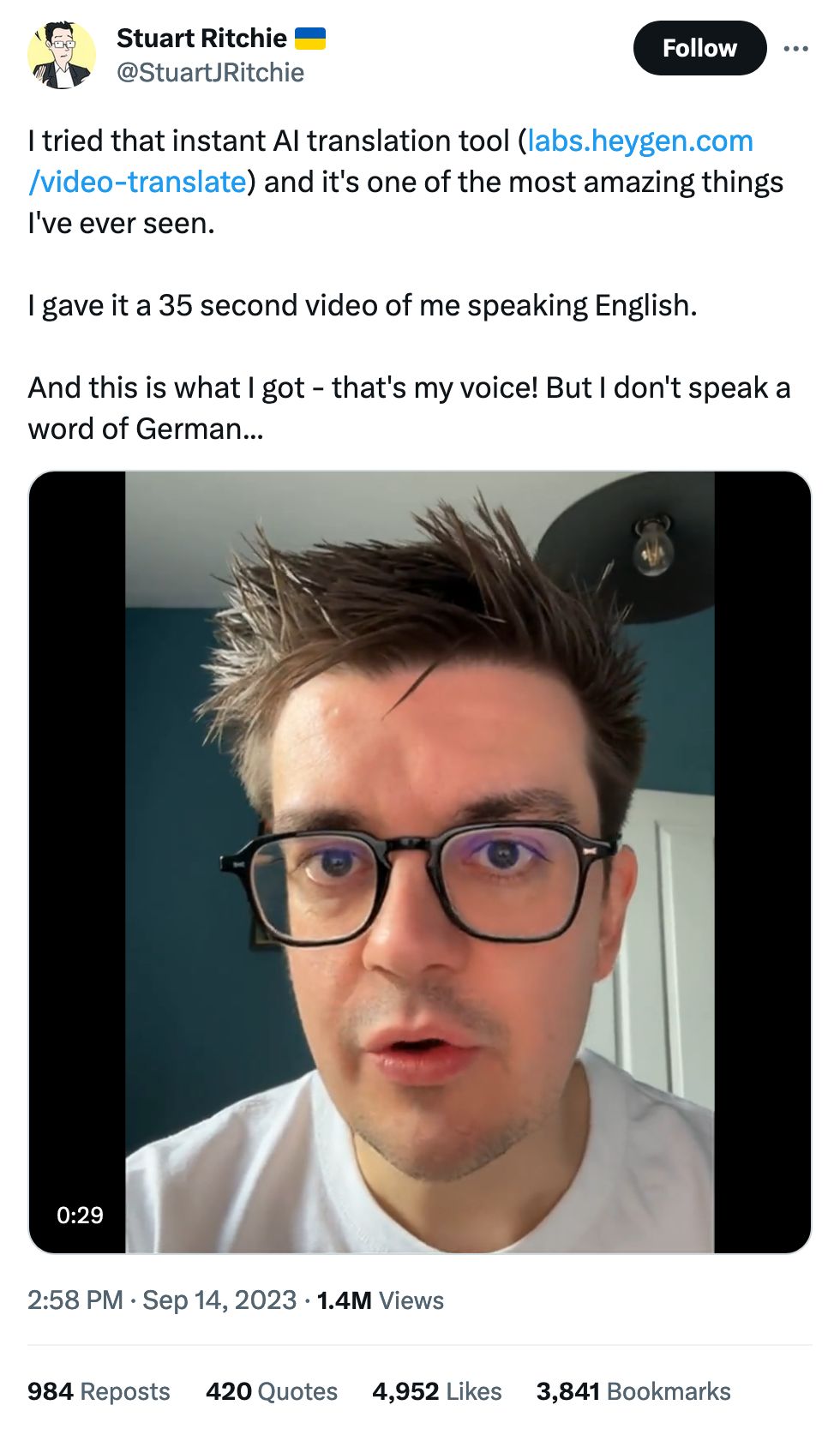

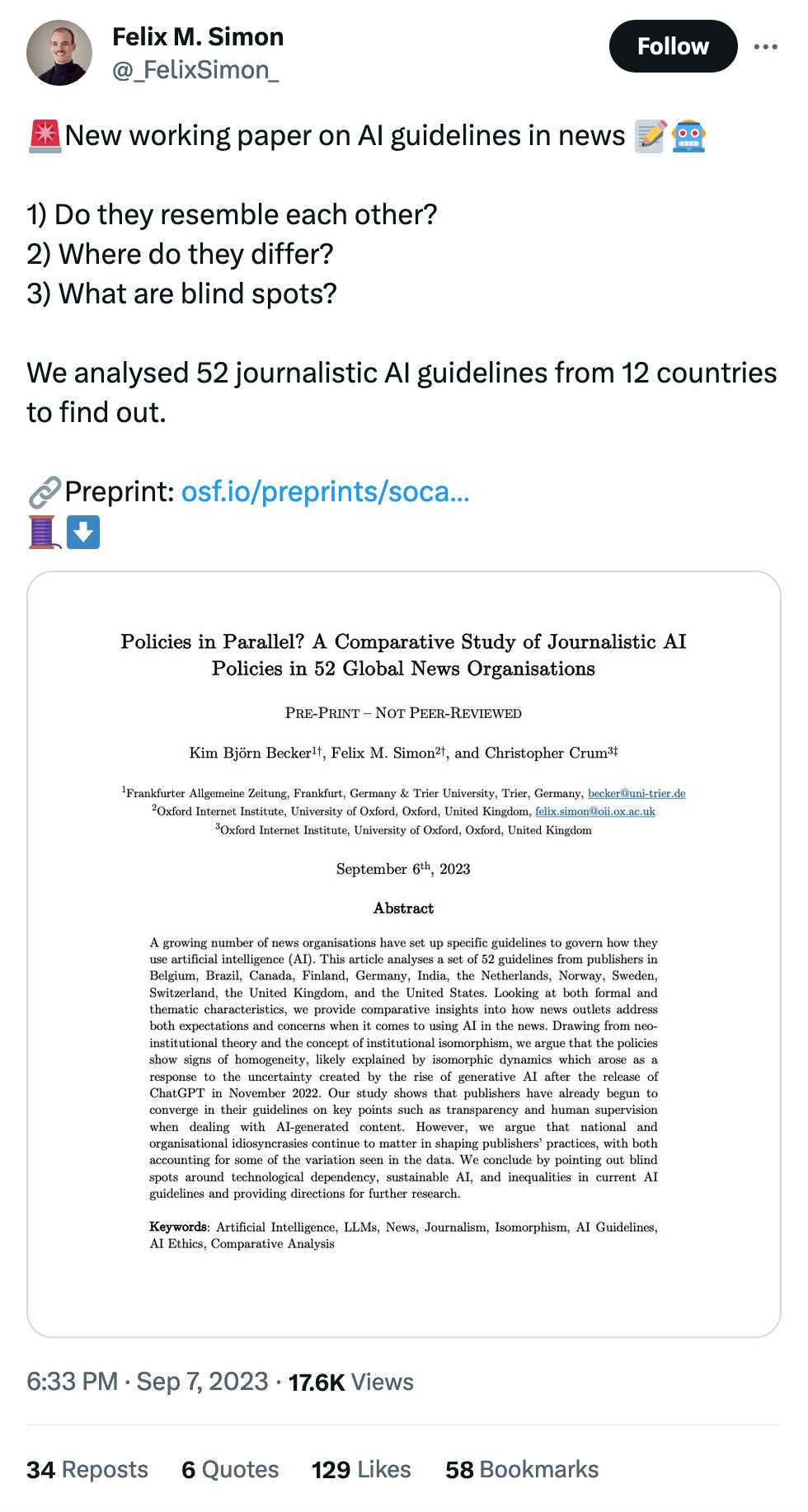

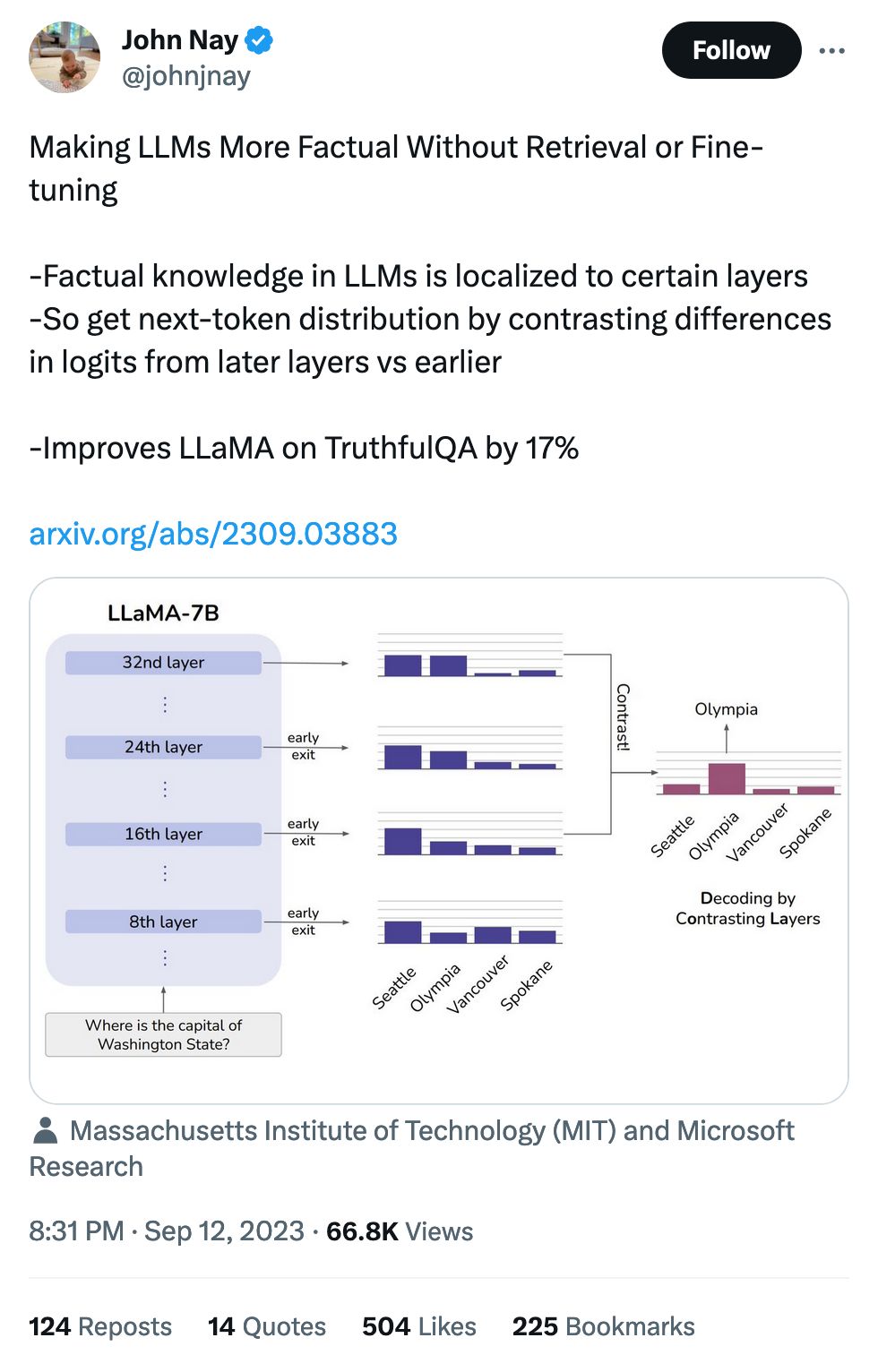

The conflation of generative AI with AI/machine learning in general is good in one sense: tools that would once have been thought of as too technical are gaining acceptance. On the other hand, gen AI is awful at truth-telling, the only thing that journalism needs to care about.

Especially unsettling is that they don’t seem to have a solid grasp of the tech.

One of the big problems in journalism + AI is the lack of informed, combative discourse. I've tried, I've tried: at a conference last month I put together a last-minute session called *Trusting AI in the newsroom: Hallucinations, bias, security and labor*, which did its best to address head-on the intersection of journalism and the problems with AI.